Previously

In my previous blog I wrote about the Azure Data Factory (ADF) and how to Extract and Load multiple tables and views at once, using the ELT methode, which is mostly used when working with data platforms in the Cloud.

Today, let's look at a methode on how to insert data directly into a SQL Stored Procedure for those moments you might need to validate things first or want to do some small ETL.

Azure SQL Database

While this is not limited to Azure SQL Database only, I am going to use one for this purpose since it would respond with the database for our data platform. We are going to use the set-up from the previous blog and continue upon this.

In the target database we need 2 objects for this to work. One will be a User Defined Table (UDT) and the other one is a Stored Procedure (SP). The UDT will be a clone of the Stg.SalesOrderHeader and in the SP we will do a small transformation for data cleansing.

The SP also contains a parameter called @Deltatable. This parameter will call up the UDT and so we can use the table within the SP logic itself.

For this, the following code could be used:

CREATE TYPE [stg].[UDT_SalesOrderHeader] AS TABLE

(

[SalesOrderID] [int] NOT NULL,

[RevisionNumber] [tinyint] NOT NULL,

[OrderDate] [datetime] NOT NULL,

[DueDate] [datetime] NOT NULL,

[ShipDate] [datetime] NULL,

[Status] [tinyint] NOT NULL,

[OnlineOrderFlag] [bit] NOT NULL,

[SalesOrderNumber] [nvarchar](23) NOT NULL,

[PurchaseOrderNumber] [nvarchar](23) NULL,

[AccountNumber] [nvarchar](23) NULL,

[CustomerID] [int] NOT NULL,

[ShipToAddressID] [int] NULL,

[BillToAddressID] [int] NULL,

[ShipMethod] [nvarchar](50) NOT NULL,

[CreditCardApprovalCode] [varchar](15) NULL,

[SubTotal] [money] NOT NULL,

[TaxAmt] [money] NOT NULL,

[Freight] [money] NOT NULL,

[TotalDue] [money] NOT NULL,

[Comment] [nvarchar](max) NULL,

[rowguid] [uniqueidentifier] NOT NULL,

[ModifiedDate] [datetime] NOT NULL,

PRIMARY KEY (

SalesOrderID

),

INDEX IX_OnlineOrderFlag (

[OnlineOrderFlag]

)

)

GO

CREATE PROCEDURE [stg].[USP_DL_SalesOrderHeader]

@Deltatable [stg].[UDT_SalesOrderHeader] READONLY

AS

BEGIN

MERGE INTO [Stg].[SalesOrderHeader] WITH (TABLOCK) AS t

USING

(SELECT

[SalesOrderID],

[RevisionNumber],

[OrderDate],

[DueDate],

[ShipDate],

[Status],

[OnlineOrderFlag],

[SalesOrderNumber],

[PurchaseOrderNumber],

[AccountNumber],

[CustomerID],

[ShipToAddressID],

[BillToAddressID],

[ShipMethod],

[CreditCardApprovalCode],

[SubTotal],

[TaxAmt],

[Freight],

[TotalDue],

CASE

WHEN [Comment] IS NULL AND [OnlineOrderFlag] = 0 THEN 'Store order'

WHEN [Comment] IS NULL AND [OnlineOrderFlag] = 1 THEN 'Online order'

ELSE [Comment]

END [Comment],

[rowguid],

[ModifiedDate]

FROM @Deltatable

) AS s

ON ( s.[SalesOrderID] = t.[SalesOrderID] )

WHEN NOT MATCHED BY TARGET

THEN INSERT (

[SalesOrderID],

[RevisionNumber],

[OrderDate],

[DueDate],

[ShipDate],

[Status],

[OnlineOrderFlag],

[SalesOrderNumber],

[PurchaseOrderNumber],

[AccountNumber],

[CustomerID],

[ShipToAddressID],

[BillToAddressID],

[ShipMethod],

[CreditCardApprovalCode],

[SubTotal],

[TaxAmt],

[Freight],

[TotalDue],

[Comment],

[rowguid],

[ModifiedDate]

)

VALUES (

[SalesOrderID],

[RevisionNumber],

[OrderDate],

[DueDate],

[ShipDate],

[Status],

[OnlineOrderFlag],

[SalesOrderNumber],

[PurchaseOrderNumber],

[AccountNumber],

[CustomerID],

[ShipToAddressID],

[BillToAddressID],

[ShipMethod],

[CreditCardApprovalCode],

[SubTotal],

[TaxAmt],

[Freight],

[TotalDue],

[Comment],

[rowguid],

[ModifiedDate]

);

END;

GOAzure Data Factory

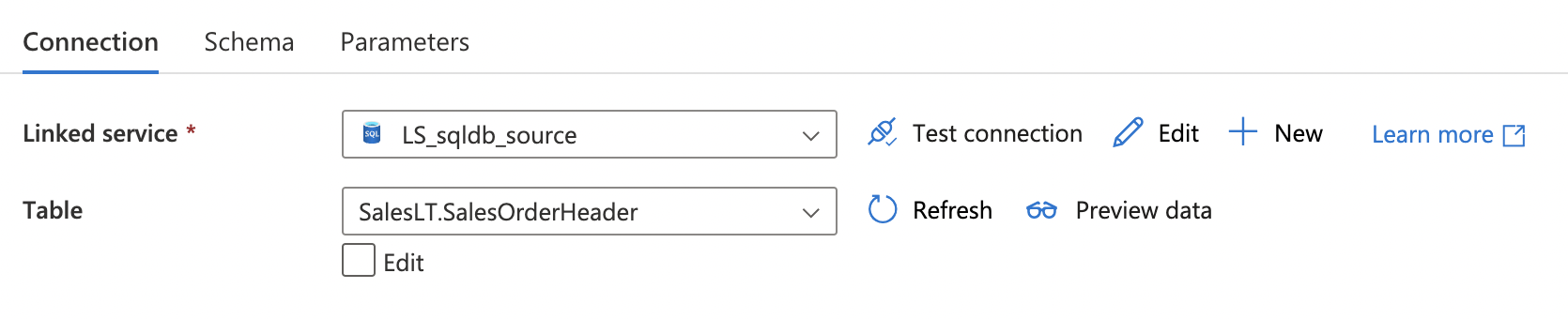

For this we are going to make some new datasets, again for the source and target databases as we did in the previous blog. For the source database, we make the following dataset:

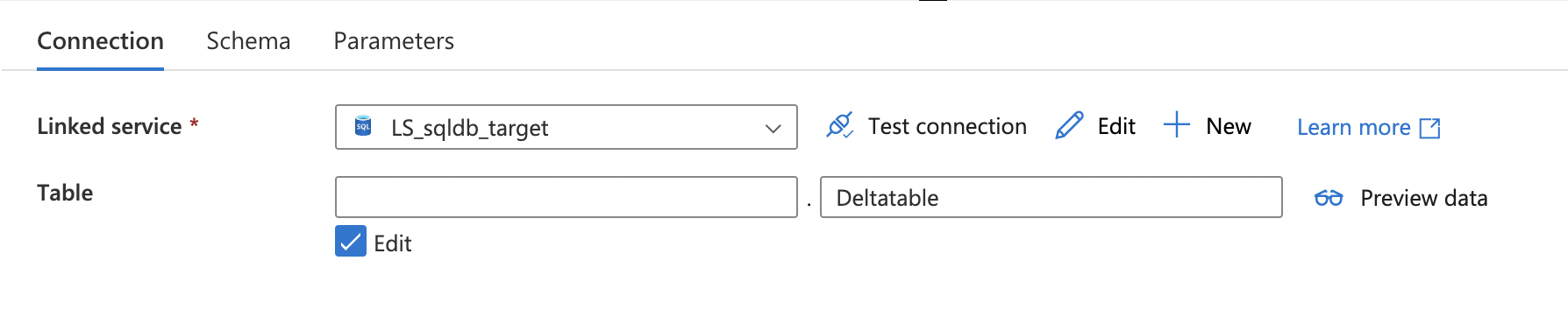

Now we make a dataset for the target database. It is important to specify the parameter name from the SP within it. In this case add Deltatable to the second field. NOTE: the Preview data option will not work using the dataset like this.

Create a new pipeline and give it a logical name, I used PL_Copy_UDT_SP for this example. After creating it, open the Move & Transform category, followed by clicking on the Copy Data component and dragging and dropping it onto the canvas.

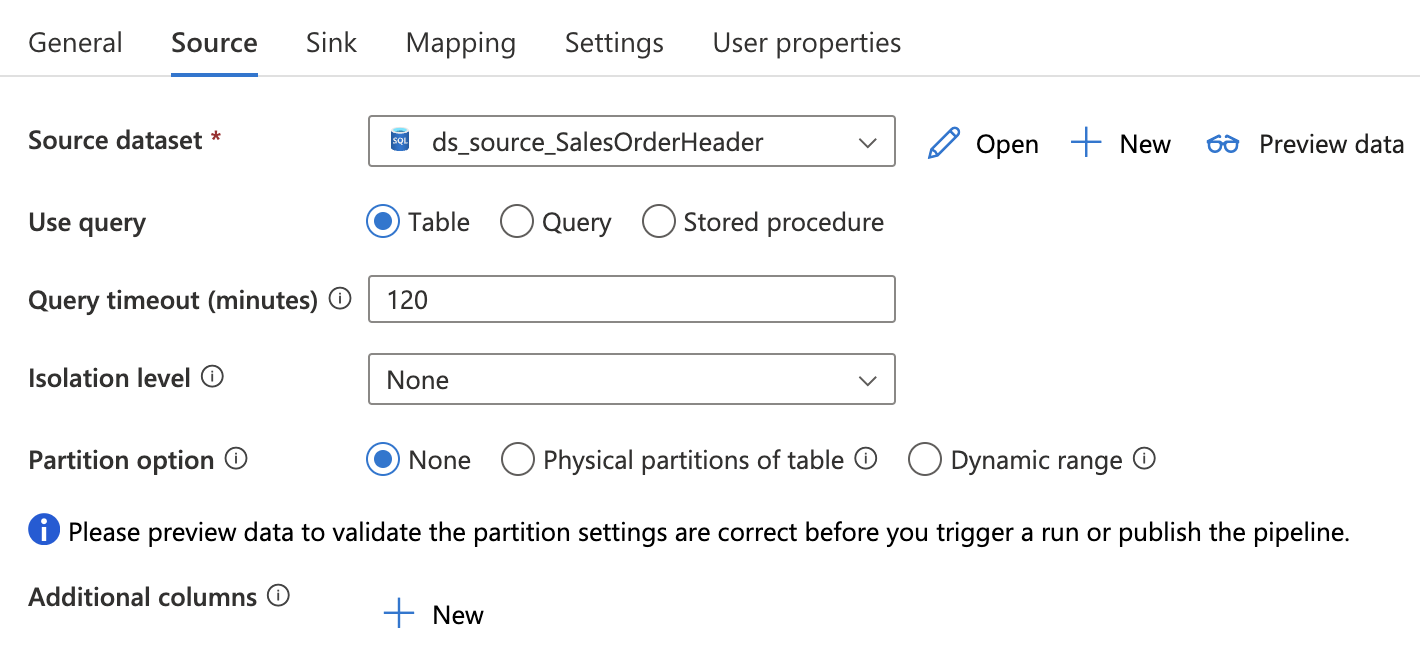

Click on the Source Tab and select the new dataset from the source database we just created.

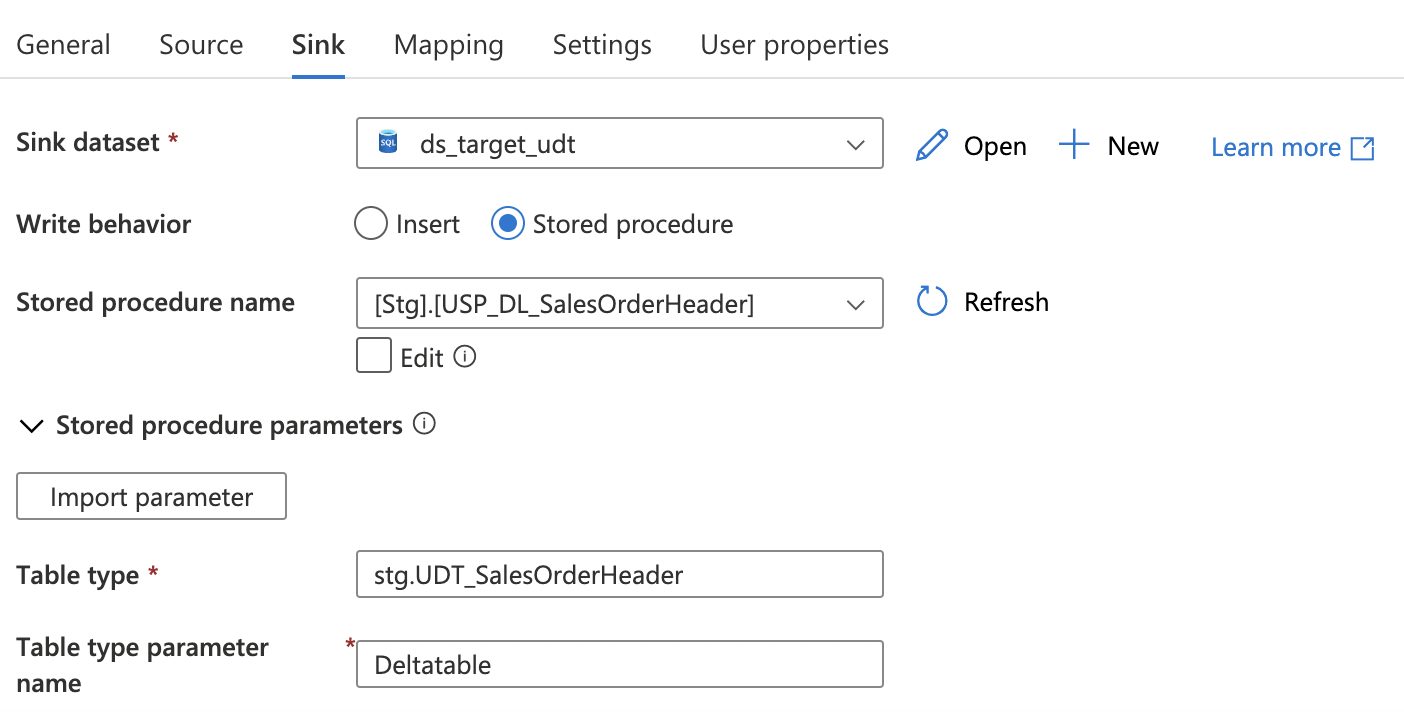

Next we go to the Sink Tab and select the Stored procedure option and select our created SP. Click on the Import parameter to get the parameters from the SP loaded. NOTE: Don't forget to add the schema name before the name of the Table Type, otherwise it wouldn't work. In this case it would be stg.

The pipeline should be done, don't forget to publish so everything is saved.

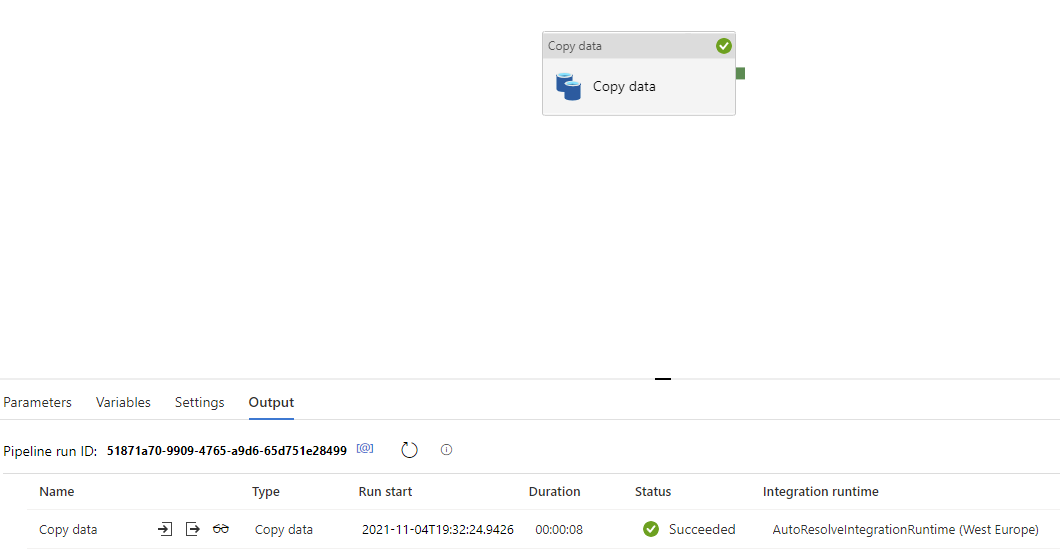

After publishing, click on the Debug button to test the pipeline. You can login to the target database and check for the results, it should now contain comments.

What's next?

Lately I have been busy with writing many .Bicep files for implementing services via Infrastructure as Code. When implementing a Bicep file via YAML, I noticed something a lot of other people do, but isn't necessary. So let's have a look at how to make this easier!