Previously

In my previous blog I talked about exporting deployment logs with Azure Logic Apps to an Azure Data Lake, so that you keep them for compliance or regulatory purposes and can create reports on them, as is common for data on a Data Lake. Today we will look at Log Analytics and how to export those logs as well.

As a best practice for Log Analytics workspaces, it is recommended to have a month of data available to you. This can be configured by enabling a retention policy of 30 days (which is 31 days for most months). After this period, data will be deleted from your workspace, which you probably don’t want if you need to keep it for regulatory or compliance purposes. To prevent this, a process is needed that automatically archives these logs before they are deleted, so that you can restore them and query their content when needed.

Let's have a look at how we can set up such a process with Azure Logic Apps!

Requirements and configuration

Before we dive into the logic of the Logic App itself, we need a Log Analytics workspace populated with data, an Azure Storage account/Azure Data Lake and a blank Logic App.

If you want to know how the majority of these services are created, I welcome you to look at previous blogs in which this is explained.

In this case I will assume all 3 services are present, and we can further configure what is needed, starting with configuring the retention policy on Log Analytics.

Log Analytics

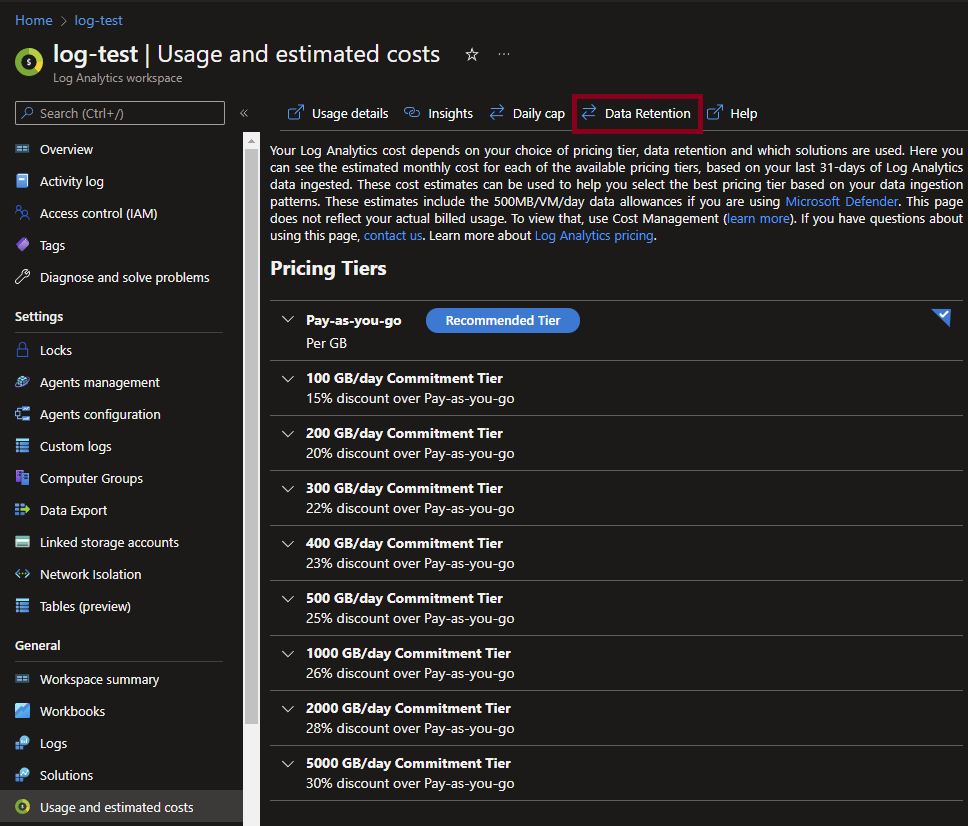

Open the Azure portal and navigate to your Log Analytics Workspace. Inside the resource, go to Usage and estimated costs under the General tab.

In the horizontal navigation you will see an option called Data Retention; click on it.

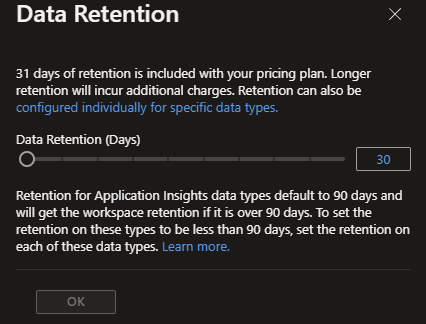

A new window will slide open, where you can change the retention rate of your logs. By default this should already be 30 days, but if you have used Log Analytics before it might be configured differently. If it has been configured differently, leave it as it is for now, so no data loss will occur! We will come back to this at a later moment.

Enabling MSI and RBAC/ ACL

This is something I have shown before in previous blogs. To enable the MSI on your Azure Logic App, you can have a look at this blog. When using a regular Azure Storage account with RBAC, check out this blog, and when using an Azure Data Lake with ACL rights you can check this blog.

Creating the Logic App

Before we start looking at the logic of the Logic App itself, there are some limitations I need to present to you, in case you have a lot of data within your Log Analytics. The Log Analytics connector that we will be using has a few limits, which are as follows:

- Log queries cannot return more than 500,000 rows.

- Log queries cannot return more than 64,000,000 bytes (61 MB).

- Log queries cannot run longer than 10 minutes by default.

- Log Analytics connector is limited to 100 calls per minute.

To work around most of these limitations, you can run the export on a daily or hourly basis. In my example I will look at a daily schedule.

NOTE: If you have gigabytes (> 1 GB) of logs per hour/day, additional steps or a different solution might be needed.
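To get a feeling for whether a daily export fits within these limits, the check can be sketched in a few lines of Python. This is only a rough sketch: the function names are my own, the row and byte limits are the ones listed above, and it assumes your logs are spread evenly over the day, which is rarely exactly true.

```python
import math

# Limits taken from the connector limits listed above.
MAX_ROWS = 500_000
MAX_BYTES = 64_000_000


def fits_limits(rows: int, size_bytes: int) -> bool:
    """True when a single query result stays within both limits."""
    return rows <= MAX_ROWS and size_bytes <= MAX_BYTES


def suggest_schedule(rows_per_day: int, bytes_per_day: int) -> str:
    """Suggest 'daily', 'hourly' or 'other' for a given daily log volume.

    Assumption: logs are spread evenly over the 24 hours of a day.
    """
    if fits_limits(rows_per_day, bytes_per_day):
        return "daily"
    rows_per_hour = math.ceil(rows_per_day / 24)
    bytes_per_hour = math.ceil(bytes_per_day / 24)
    if fits_limits(rows_per_hour, bytes_per_hour):
        return "hourly"
    # Even hourly exports would exceed a limit, so another approach is needed.
    return "other"
```

For example, 2,000,000 rows and 500,000,000 bytes per day would not fit in one daily query, but would fit in hourly exports under the even-spread assumption.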

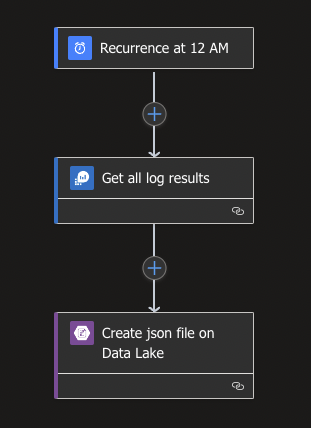

The Logic App itself is straightforward and consists of 3 steps: 1 trigger and 2 actions. It runs once per day, looks up the logs within a timeframe of 24 hours and saves them as a JSON file to our Azure Data Lake.

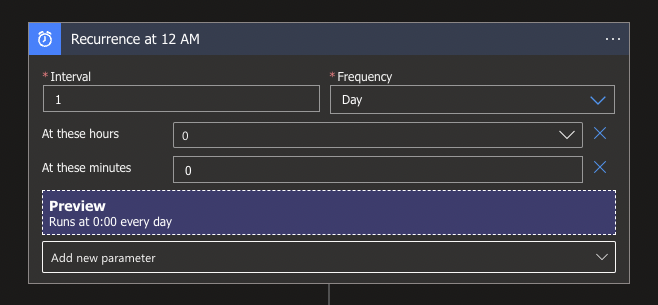

To start, look for the Schedule connector and select the Recurrence trigger. Since we will be running it once per day to get all logs, I opted for midnight (0:00 hours). If you want to specify the hour and minutes, you can do so by clicking Add new parameter and selecting the available parameters.

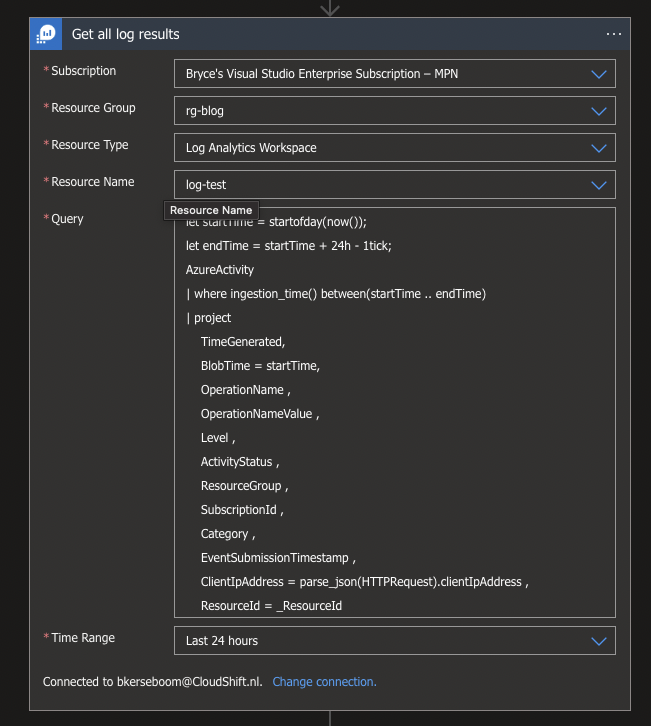

Now that it runs every night, it is important to look at yesterday's logs, so that everything from yesterday will be archived. To accomplish this we will use the Azure Monitor Logs connector with the Run query and list results action. This action allows us to use Kusto Query Language (KQL) to retrieve our logs. The code below filters the logs on everything from yesterday:

let startTime = startofday(ago(1d)); // start of yesterday (UTC)

let endTime = startTime + 24h - 1tick;

AzureActivity

| where ingestion_time() between(startTime .. endTime)

| project

TimeGenerated,

BlobTime = startTime,

OperationName ,

OperationNameValue ,

Level ,

ActivityStatus ,

ResourceGroup ,

SubscriptionId ,

Category ,

EventSubmissionTimestamp ,

ClientIpAddress = parse_json(HTTPRequest).clientIpAddress ,

ResourceId = _ResourceId

Besides the filtering in the query itself, we also need to specify the Time Range in the connector, so the Last 24 hours option would be perfect in this use case.
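If you want to double-check which window the query is supposed to cover, here is a small Python sketch of the same calculation. The function name is hypothetical, and because Python's datetime only goes down to microseconds, the end of the window is 1 microsecond before midnight rather than 1 tick (100 nanoseconds) as in KQL.

```python
from datetime import datetime, timedelta, timezone
from typing import Tuple


def yesterday_window(now: datetime) -> Tuple[datetime, datetime]:
    """Return (start, end) of yesterday in UTC for a timezone-aware 'now'."""
    start_of_today = now.astimezone(timezone.utc).replace(
        hour=0, minute=0, second=0, microsecond=0
    )
    start = start_of_today - timedelta(days=1)
    # Last representable moment of yesterday at microsecond precision.
    end = start_of_today - timedelta(microseconds=1)
    return start, end


if __name__ == "__main__":
    start, end = yesterday_window(datetime.now(timezone.utc))
    print(start.isoformat(), end.isoformat())
```

Running it shortly after midnight UTC should give a window that covers the whole previous day, which is what the nightly export is meant to archive.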

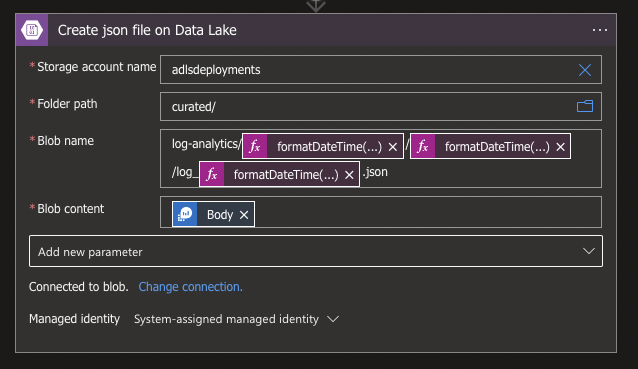

With the KQL query in place, we only need to create a JSON file on our Azure Data Lake. For this we will use the Azure Blob Storage connector with the Create Blob (V2) action.

Fill in your Azure Storage account (which is Azure Data Lake enabled) and specify a Folder Path and Name for your file. In my case I used the following code to give it its name:

log-analytics/@{formatDateTime(addDays(utcNow(),-1),'yyyy')}/@{formatDateTime(addDays(utcNow(),-1),'MM')}/log_@{formatDateTime(addDays(utcNow(),-1),'yyyyMMdd')}.json

Last but not least, we will have to add the Blob content, which will contain the output of the KQL query. Use the Body property or the following code:

@{body('Get_all_log_results')}

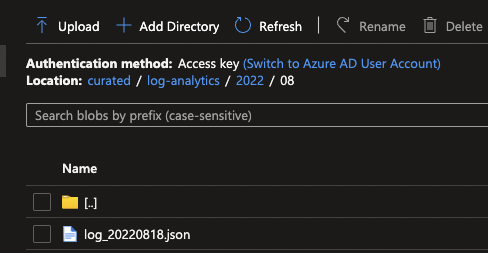
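To see what the file name expression resolves to for a given run, the same logic can be mirrored in Python. This is just an illustration with a hypothetical function name; the Logic App itself uses the expression shown earlier.

```python
from datetime import datetime, timedelta, timezone


def blob_path(now: datetime) -> str:
    """Build the year/month folder structure and file name for yesterday."""
    day = now - timedelta(days=1)  # same idea as addDays(utcNow(), -1)
    return f"log-analytics/{day:%Y}/{day:%m}/log_{day:%Y%m%d}.json"


if __name__ == "__main__":
    print(blob_path(datetime.now(timezone.utc)))
```

A run on 1 January 2024 would write to log-analytics/2023/12/log_20231231.json, so the logs of the last day of the year end up in the folder of the year and month they belong to.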

If everything is configured correctly and you run your Logic App, you should be able to see a new file in your Data Lake.

Now if your retention rate was higher than the default 30 days and you know your log size per day/hour, you will be able to run it for all previous days, for which you need to adjust the variables in the KQL to those days. Afterwards, when all exports are made, you should be able to reduce your retention rate in Log Analytics to the default and save some money in the process!
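To work out which days to re-run the export for, a small sketch like the following can help. It assumes (my assumption, not a Log Analytics feature) that you simply want one export per calendar day, from the oldest day still inside your retention period up to yesterday; the function name is hypothetical.

```python
from datetime import date, timedelta
from typing import List


def backfill_days(today: date, retention_days: int) -> List[date]:
    """Return the days to export, oldest first, ending with yesterday."""
    return [today - timedelta(days=n) for n in range(retention_days, 0, -1)]


if __name__ == "__main__":
    # Example: a workspace that was configured with 90 days of retention.
    for day in backfill_days(date.today(), 90):
        print(day.isoformat())
```

Each day in the list is one run of the export with the KQL variables adjusted to that day.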

What’s next?

To stay a bit longer on the topic of retention, we will check how we can make automated backups for Azure SQL databases by exporting BACPACs. While Azure SQL Databases do have a backup process in place by default, regulations and compliance might sometimes demand otherwise. Stay tuned for next week's blog!